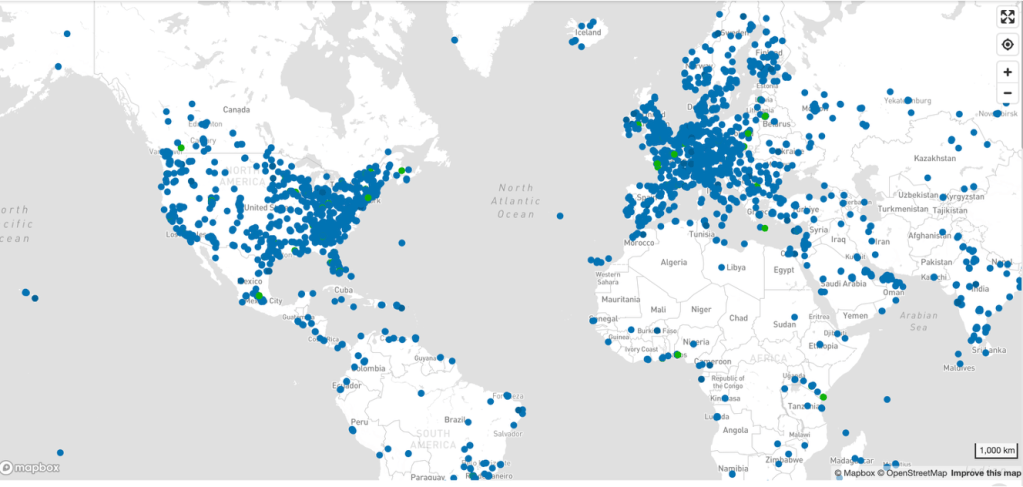

A grass‑roots “data center and electric grid rebellion” is emerging across the United States as communities push back against the local consequences of AI‑driven infrastructure expansion. Residents are increasingly challenging large‑scale data centers and the transmission lines needed to power them, citing concerns about enormous electricity demand, water consumption, noise pollution, land use, declining property values, and opaque approval processes. What were once routine zoning or utility hearings are now crowded, contentious events, with citizens organizing quickly and sharing strategies across counties and states.

This opposition is no longer ad hoc. In Northern Virginia—often described as the global epicenter of data centers—organized campaigns such as the Coalition to Protect Prince William County have mobilized voters, fundraised for local elections, demanded zoning changes, and challenged approvals in court. In Maryland’s Prince George’s County, resistance has taken on a strong environmental‑justice framing, with groups like the South County Environmental Justice Coalition arguing that data centers concentrate environmental and energy burdens in historically marginalized communities and calling for moratoria and stronger safeguards.

Nationally, consumer and civic groups are increasingly coordinated, using shared data, mapping tools, and media pressure to argue that unchecked data‑center growth threatens grid reliability and shifts costs onto ratepayers. Together, these campaigns signal a broader political reckoning over who bears the costs of the AI economy.

Here’s a snapshot of grass-roots opposition in Texas, Louisiana, and Nevada:

Texas

Texas has some of the most active and durable local opposition, driven by land use, water, and transmission corridors.

- Hill Country & Central Texas (Burnet, Llano, Gillespie, Blanco Counties)

Grass-roots groups that initially formed around high-voltage (765 kV) transmission lines tied to load growth now explicitly link those lines to data center demand. Campaigns emphasize:

- rural land fragmentation

- wildfire risk

- eminent domain abuse

- lack of local benefit

These groups are often informal coalitions of landowners rather than NGOs, but they coordinate testimony, public-records requests, and local elections.

- DFW & North Texas

Neighborhood associations opposing rezoning for hyperscale facilities focus on noise (backup generators), property values, and school-district tax distortions created by data-center abatements.

- ERCOT framing

Texas groups uniquely argue that data centers are socializing grid instability risk onto residential ratepayers while privatizing upside—an argument that resonates with conservative voters.

Louisiana

Opposition is newer but coalescing rapidly, often tied to petrochemical and LNG resistance networks.

- North Louisiana & Mississippi River Corridor

Community groups opposing new data centers frame them as:

- “energy parasites” tied to gas plants

- extensions of an already overburdened industrial corridor

- threats to water tables and wetlands

Organizers often overlap with environmental-justice and faith-based coalitions that previously fought refineries and export terminals.

- Key tactic: reframing data centers as industrial facilities, not “tech,” triggering stricter land-use scrutiny.

Nevada

Nevada opposition centers on water scarcity and public-land use.

- Clark County & Northern Nevada

Residents and conservation groups question:

- water allocations for evaporative cooling

- siting near public or BLM-managed land

- grid upgrades subsidized by ratepayers for private AI firms

- Distinct Nevada argument: data centers compete directly with housing and tribal water needs, not just environmental values.

The Data Center Rebellion is Here and It’s Reshaping the Political Landscape (Washington Post)

Residents protest high-voltage power lines that could skirt Dinosaur Valley State Park (Alejandra Martinez and Paul Cobler/Texas Tribune)

US Communities Halt $64B Data Center Expansions Amid Backlash (Lucas Greene/WebProNews)

Big Tech’s fast-expanding plans for data centers are running into stiff community opposition (Marc Levy/Associated Press)

Data center ‘gold rush’ pits local officials’ hunt for new revenue against residents’ concerns (Alander Rocha/Georgia Recorder)