It gets rid of your gambling debts

It quits smoking

It’s a friend, it’s a companion

It’s the only product you will ever need

From Step Right Up, written by Tom Waits

The AI “frontier labs” (OpenAI, Anthropic, DeepMind, xAI, and their constellation of evangelists) often present themselves as the high priests of a coming digital transcendence. This is sometimes called “the singularity,” a hypothetical future point when artificial intelligence surpasses human intelligence, triggering rapid, unpredictable technological growth. Often associated with self-improving AI, it implies a transformation of society, consciousness, and control, where human decision-making may be outpaced or rendered obsolete by machines operating beyond our comprehension.

But viewed through the lens of social psychology, the AI evangelists increasingly resemble the cognitive dissonance cults famously documented in When Prophecy Fails, the important study of a UFO cult (a la Heaven’s Gate) by Dr. Leon Festinger and his team. (See also The Great Disappointment.)

In that foundational social psychology study, a group of believers centered around a woman named “Marian Keech” predicted that the world would end in a cataclysmic flood and that they would be rescued by alien beings. When the prophecy failed, they doubled down. Rather than abandoning their beliefs, the group rationalized the outcome (“We were spared because of our faith”) and became even more committed. They get this self-hypnotized look, kind of like this guy (and remember, this is what the Meta marketing people thought was the flagship spot for Meta’s entire superintelligence hustle):

This same psychosis permeates Singularity narratives and the AI doom/alignment discourse:

– The world is about to end — not by water, but by unaligned superintelligence.

– A chosen few (frontier labs) hold the secret knowledge to prevent this.

– The public must trust them to build, contain, and govern the very thing they fear.

– And if the predicted catastrophe doesn’t come, they’ll say it was their vigilance that saved us.

Like cultic prophecy, the Singularity promises transformation:

– Total liberation or annihilation (including liberation from annihilation by the Red Menace, i.e., the Chinese Communist Party).

– A timeline (“AGI by 2027”, “everything will change in 18 months”).

– An elite in-group with special knowledge and “Don’t be evil” moral responsibility.

– A strict hierarchy of belief and loyalty — criticism is heresy, delay is betrayal.

This serves multiple purposes:

1. Maintains funding and prestige by positioning the labs as indispensable moral actors.

2. Deflects criticism of copyright infringement, resource consumption, or labor abuse with existential urgency (because China, don’t you know).

3. Converts external threats (like regulation) into internal persecution, reinforcing group solidarity.

The rhetoric of “you don’t understand how serious this is” mirrors cult defenses exactly.

Here’s the rub: the timeline keeps slipping. Every six months, we’re told the leap to “godlike AI” is imminent. GPT‑4 was supposed to upend everything. That didn’t happen, so GPT‑5 will do it for real. Gemini flopped, but Claude 3 might still be the one.

When prophecy fails, they don’t admit error — they revise the story:

– “AI keeps accelerating.”

– “It’s a slow takeoff, not a fast one.”

– “We stopped the bad outcomes by acting early.”

– “The doom is still coming — just not yet.”

Leon Festinger’s theories, especially cognitive dissonance (the theory behind When Prophecy Fails) and social comparison, influence AI by shaping how systems model human behavior, resolve conflicting inputs, and simulate decision-making. His work guides developers of interactive agents, recommender systems, and behavioral algorithms that aim to mimic or respond to human inconsistencies, biases, and belief formation. So this isn’t a casual connection.

As in Festinger’s study, the failure of predictions intensifies belief rather than weakening it. And the deeper the believer’s personal investment, the harder it is to turn back. For many AI cultists, that investment includes financial incentives, status, and identity.

Unlike spiritual cults, AI frontier labs have material outcomes tied to their prophecy:

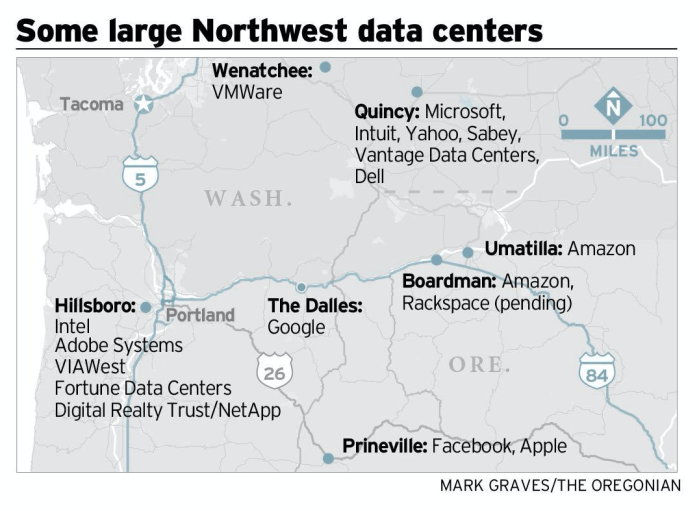

– Federal land allocations, as we’ve seen with DOE site handovers.

– Regulatory exemptions, by presenting themselves as saviors.

– Massive capital investment, driven by the promise of world-changing returns.

In the case of AI, this is not just belief — it’s belief weaponized to secure public assets, shape global policy, and monopolize technological futures. And when the same people build the bomb, sell the bunker, and write the evacuation plan, it’s not spiritual salvation — it’s capture.

The pressure to sustain the AI prophecy—that artificial intelligence will revolutionize everything—is unprecedented because the financial stakes are enormous. Trillions of dollars in market valuation, venture capital, and government subsidies now hinge on belief in AI’s inevitable dominance. Unlike past tech booms, today’s AI narrative is not just speculative; it is embedded in infrastructure planning, defense strategy, and global trade. This creates systemic incentives to ignore risks, downplay limitations, and dismiss ethical concerns. To question the prophecy is to threaten entire business models and geopolitical agendas. As with any ideology backed by capital, maintaining belief becomes more important than truth.

The Singularity, as sold by the frontier labs, is not just a future hypothesis — it’s a living ideology. And like the apocalyptic cults before them, these institutions demand public faith, offer no accountability, and position themselves as both priesthood and god.

If we want a secular, democratic future for AI, we must stop treating these frontier labs as prophets — and start treating them as power centers subject to scrutiny, not salvation.