Uncle Sugar’s “National Emergency” Pitch to Congress

At a recent Congressional hearing, former Google CEO Eric “Uncle Sugar” Schmidt delivered a message that was as jingoistic as it was revealing: if America wants to win the AI arms race, it had better start building power plants. Fast. But the subtext was even clearer: he expects the taxpayer to foot the bill because, you know, the Chinese Communist Party. Yes, when it comes to fighting the Red Menace, the all-American boys in Silicon Valley stand ready to fight to the last Ukrainian, or Taiwanese, or even Texan.

Testifying before the House Energy & Commerce Committee on April 9, Schmidt warned that AI’s natural limit isn’t chips—it’s electricity. He projected that the U.S. would need 92 gigawatts of new generation capacity—the equivalent of nearly 100 nuclear reactors—to keep up with AI demand.

Schmidt didn’t propose that Google, OpenAI, Meta, or Microsoft pay for this themselves, just like they didn’t pay for broadband penetration. No, Uncle Sugar pushed for permitting reform, federal subsidies, and government-driven buildouts of new energy infrastructure. In plain English? He wants the public sector to do the hard and expensive work of generating the electricity that Big Tech will profit from.

Will This Improve the Grid?

And let’s not forget: the U.S. electric grid is already dangerously fragile. It’s aging, fragmented, and increasingly vulnerable to cyberattacks, electromagnetic pulse (EMP) weapons, and even extreme weather events. Pouring public money into ultra-centralized AI data infrastructure—without first securing the grid itself—is like building a mansion on a cracked foundation.

If we are going to incur public debt, we should prioritize resilience, distributed energy, grid security, and community-level reliability—not a gold-plated private infrastructure buildout for companies that already have trillion-dollar valuations.

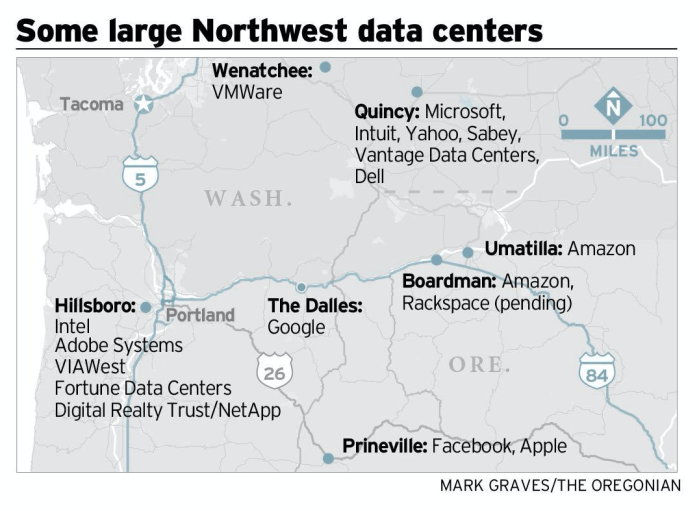

Big Tech’s Growing Appetite—and Private Hoarding

This isn’t just a future problem. The data center buildout is already in full swing, and your Uncle Sugar must be getting nervous about where he’s going to get the money to run his AI and his autonomous drone weapons. In Oregon, where electricity is famously cheap thanks to the Bonneville Power Administration’s hydroelectric dams on the Columbia River, tech companies have quietly snapped up huge portions of the grid’s output. What was once a shared public benefit (affordable, renewable power) is now being monopolized by AI compute farms whose profits leave the region for bank accounts in Silicon Valley.

Meanwhile, Microsoft is investing in a nuclear-powered data center next to the defunct Three Mile Island reactor—but again, it’s not about public benefit. It’s about keeping Azure’s training workloads running 24/7. And don’t expect them to share any of that power capacity with the public—or even with neighboring hospitals, schools, or communities.

Letting the Public Build Private Fortresses

The real play here isn’t just to use public power—it’s to get the public to build the power infrastructure, and then seal it off for proprietary use. Moats work both ways.

That includes:

– Publicly funded transmission lines across hundreds of miles to deliver power to remote server farms;

– Publicly subsidized generation capacity (nuclear, gas, solar, hydro—you name it);

– And potentially, prioritized access to the grid that lets AI workloads run while the rest of us face rolling blackouts during heatwaves.

All while tech giants don’t share their models, don’t open their training data, and don’t make their outputs public goods. It’s a privatized extractive model, powered by your tax dollars.

Been Burning for Decades

Don’t forget: Google and YouTube have already been burning massive amounts of electricity for 20 years. It didn’t start with ChatGPT or Gemini. Serving billions of search queries, video streams, and cloud storage events every day requires a permanent baseload—yet somehow this sudden “AI emergency” is being treated like a surprise, as if nobody saw it coming.

If they knew this was coming (and they did), why didn’t they build the power? Why didn’t they plan for sustainability? Why is the public now being told it’s our job to fix their bottleneck?

The Cold War Analogy—Flipped on Its Head

Some industry advocates argue that breaking up Big Tech or slowing AI infrastructure would be like disarming during a new Cold War with China. But Gail Slater, the Assistant Attorney General leading the DOJ’s Antitrust Division, pushed back forcefully—not at a hearing, but on the War Room podcast.

In that interview, Slater recalled how AT&T tried to frame its 1980s breakup as a national security threat, arguing it would hurt America’s Cold War posture. But the DOJ did it anyway—and it led to an explosion of innovation in wireless technology.

“AT&T said, ‘You can’t do this. We are a national champion. We are critical to this country’s success. We will lose the Cold War if you break up AT&T,’ in so many words. … Even so, [the DOJ] moved forward … America didn’t lose the Cold War, and … from that breakup came a lot of competition and innovation.”

“I learned that in order to compete against China, we need to be in all these global races the American way. And what I mean by that is we’ll never beat China by becoming more like China. China has national champions, they have a controlled economy, et cetera, et cetera.

“We win all these races and history has taught by our free market system, by letting the ball rip, by letting companies compete, by innovating one another. And the reason why antitrust matters to that picture, to the free market system is because we’re the cop on the beat at the end of the day. We step in when competition is not working and we ensure that markets remain competitive.”

Slater’s message was clear: regulation and competition enforcement are not threats to national strength; they are prerequisites for it. By that logic, the richest corporations in commercial history have no claim on subsidies from the American taxpayer.

Bottom Line: It’s Public Risk, Private Reward

Let’s be clear:

– They want the public to bear the cost of new electricity generation.

– They want the public to underwrite transmission lines.

– They want the public to clear away regulatory hurdles.

– And they plan to privatize the upside, lock down the infrastructure, keep their models secret, and socialize the investment risk.

This isn’t a public-private partnership. It’s a one-way extraction scheme. America needs a serious conversation about energy—but it shouldn’t begin with asking taxpayers to bail out the richest companies in commercial history.