In case you missed it, Spotify has apparently been training its own music AI that should allow them to capture some of the AI hype on Wall Street. But it brings back some bad memories.

There was a time when the music business had a simple rule: “We will never let another MTV build a business on our backs”. That philosophy arose from watching the arbitrage as value created by artists was extracted by platforms that had nothing to do with creating it. That spectacle shaped the industry’s deep reluctance to license digital music in the early years of the internet. “Never” was supposed to mean never.

I took them at their word.

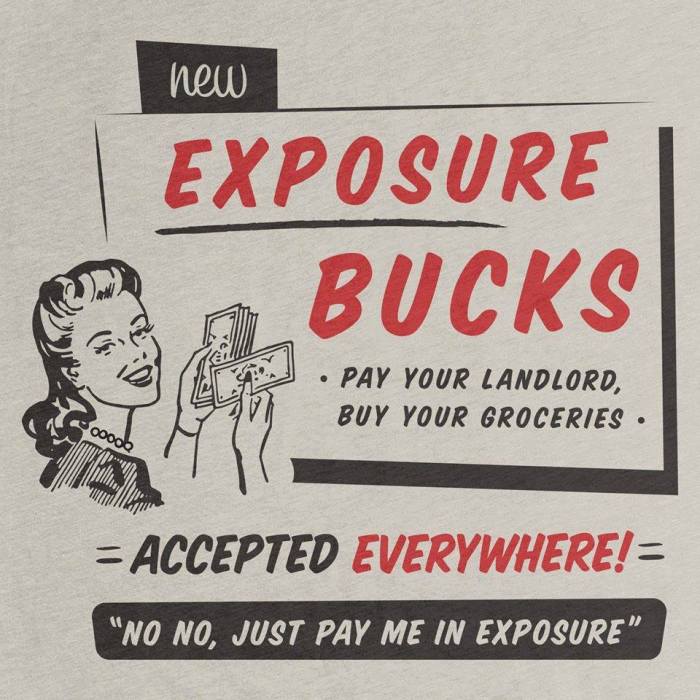

But of course, “never” turned out to be conditional. The industry made exception after exception until the rule dissolved entirely. First came the absurd statutory shortcut of the DMCA safe harbor era. Then YouTube. Then iTunes. Then Spotify. Then social media: Twitter and Facebook. Then TikTok. Each time, platforms were allowed to scale first and renegotiate later (and Twitter still hasn’t paid). Each time, the price of admission for the platform was astonishingly low compared to the value extracted from music and musicians. In many cases, it was astonishingly low compared to the current market value of those platforms, businesses that are totally dependent on creatives. (You could probably put Amazon in that category.)

Some of those deals came wrapped in what looked, at the time, like meaningful compensation: headline-grabbing advances and what were described as “equity participation.” In reality, those advances were finite and the equity was often a thin sliver, while the long-term effect was to commoditize artist royalties and shift durable value toward the platforms. That is one reason so many artists came to resent and in many cases openly despise Spotify and the “big pool” model. All the while, artists were told how transformative Spotify’s algorithm is, with no explanation of how that wonderful algorithm misses 80% of the music on the platform.

And now we arrive at the latest collapse of “never”: Spotify’s announcement that it is developing its own music AI and derivative-generation tools.

If you disliked Spotify before, you may loathe what comes next.

This moment is different — but in many ways it is the same fundamental problem MTV created. Artists and labels provided the core asset — their recordings — for free or nearly free, and the platform built a powerful business by packaging that value and selling it back to them. Distribution monetized access to music; AI monetizes the music itself.

According to Music Business Worldwide:

Spotify’s framing appears to offer something of a middle ground. [New CEO] Söderström is not arguing for open distribution of AI derivatives across the internet. Instead, he’s positioning Spotify as the platform where this interaction should happen – where the fans, the royalty pool, and the technology already exist.

Right, our fans and his pathetic “royalty pool.” And this is supposed to make us like you?

The Training Gap

Which brings us to the question Spotify has not answered — the question that matters more than any feature announcement or product demo:

What did they train on?

Was it Epidemic Sound? Was it licensed catalog? Public domain recordings? User uploads? Pirated material?

On the public record, any of these is possible.

But far more likely to me: Did Spotify train on the recordings licensed for streaming and Spotify’s own platform user data derived from the fans we drove to their service — quietly accumulated, normalized, and ingested into AI over years?

Spotify has not said.

And that silence matters.

The Transparency Gap

Creators currently have no meaningful visibility into whether their work has already been absorbed into Spotify’s generative systems. No disclosure. No audit trail. No licensing registry. No opt-in structure. No compensation framework. The unknowns are not theoretical — they are structural:

- Were your recordings used for training?

- Do your performances now exist inside model weights?

- Was consent ever obtained?

- Was compensation ever contemplated?

- Can outputs reproduce protected expression derived from your work?

If Spotify trained on catalog licensed to them for an entirely different purpose without explicit, informed permission from rights holders and performers, then AI derivatives are not merely a new feature. They are a massively infringing second layer of value extraction built on top of the first exploitation — the original recordings that creators already struggled to monetize fairly.

This is not innovation. It is recursion.

Platform Data: The Quiet Asset

Spotify possesses one of the largest behavioral and audio datasets in the history of recorded music, a dataset that was licensed to them for an entirely different purpose: not just recordings, but stems, usage patterns, listener interactions, metadata, and performance analytics. If that corpus was used, formally or informally, as training input for this Spotify AI tool that magically appeared, then Spotify’s AI is built not just on music, but on the accumulated creative labor of millions of artists.

Yet creators were never asked. No notice. No explanation. No disclosure.

It must also be said that there is a related governance question. Daniel Ek’s investment in the defense-AI company Helsing has been widely reported, and Helsing’s systems, like all advanced AI, depend on large-scale model training, data pipelines, and machine learning infrastructure. Spotify has supposedly developed its own AI capabilities separately.

This raises a narrow but legitimate transparency question: is there any technological, data, personnel, or infrastructure overlap — any “crosstalk” — between AI development connected to Helsing’s automated weapons and the models deployed within Spotify? No public evidence currently suggests such interaction, and the companies operate in different domains, but the absence of disclosure leaves creators and stakeholders unable to assess whether safeguards, firewalls, and governance boundaries exist. Where powerful AI systems coexist under shared leadership influence, transparency about separation is as important as transparency about training itself.

The core issue is not simply licensing. It is transparency. A platform cannot convert custodial access into training rights while declining to explain where its training data came from.

That’s why this quote from MBW is such a typical example of the exceptionally shortsighted and moronic pablum we usually get from the Spotify executive team:

Asked on the call whether AI music platforms like Suno, Udio and Stability could themselves become DSPs and take share from Spotify, Norström pushed back: “No rightsholder is against our vision. We pretty much have the whole industry behind us.”

Of course, the premise of the question is one I have been wondering about myself. I assume that Suno and Udio fully intend to get into the DSP game. But Spotify’s executive blew right past that thoughtful question and answered a question he wasn’t asked, one that is very relevant to us: “We pretty much have the whole industry behind us.”

Oh, well, you actually don’t. And it would be very informative to know exactly what makes you say that, since you have not disclosed anything about whatever the “it” is that you think the whole industry is behind.

Spotify’s Shadow Library Problem

Across the AI sector, a now-familiar pattern has emerged: Train first. Explain later — if ever.

The music industry has already seen this logic elsewhere: massive ingestion followed by retroactive justification. The question now is whether Spotify, a licensed, mainstream platform for its music service, is replicating that same pattern inside a closed AI ecosystem for which no licenses have been announced.

So the question must be asked clearly:

Is Spotify’s AI derivative engine built entirely on disclosed, authorized training sources? Or is this simply a platform-contained version of shadow-library training?

Because if models ingested:

- Unlicensed recordings

- User-uploaded infringing material

- Catalog works without explicit training disclosure

- Performances lacking performer awareness

then AI derivatives risk becoming a backdoor exploitation mechanism operating outside traditional consent structures. A derivative engine built on undisclosed training provenance is not a creator tool. It is a liability gap. You know, kind of like Anna’s Archive.

A Direct Response to Gustav Söderström: What Training Would Actually Be Required?

Launching a true music generation or derivative engine would require massive, structured training, including:

1. Large-Scale Audio Corpus

Millions of full-length recordings across genres, eras, and production styles to teach models musical structure, timbre, arrangement, and performance nuance. Now where might those come from?

2. Stem-Level and Multitrack Data

Separated vocals, instruments, and production layers to allow recombination, remixing, and stylistic transformation.

3. Performance and Voice Modeling

Extensive vocal and instrumental recordings to capture phrasing, tone, articulation, and expressive characteristics — the very elements tied to performer identity.

4. Metadata and Behavioral Signals

Tempo, key, genre, mood, playlist placement, skip rates, and listener engagement data to guide model outputs toward commercially viable patterns.

5. Style and Similarity Encoding

Statistical mapping of musical characteristics enabling the system to generate “in the style of” outputs — the core mechanism behind derivative generation.

6. Iterative Retraining at Scale

Continuous ingestion and refinement using newly available recordings and platform data to improve fidelity and relevance.

7. Funding for all of the above

No generative music system of consequence can be built without enormous training exposure to real recordings and performances, or without the money to pay for it.

Which returns us to the unresolved question:

Where did Spotify obtain that training data?

Because the issue is not whether Spotify could license training material. The issue is that Spotify has not explained — at all — how its training corpus was assembled.

Opacity is the problem.

Personhood Signals: Training on Recordings Is Training on People

Spotify can describe AI derivatives as “music tools,” but training on recordings is not just training on songs. Recordings contain personhood signals — the distinctive human identifiers embedded in performance and production that let a system learn who someone is (or can sound like), not merely what the composition is.

Personhood signals include (non-exhaustively):

- Voice identity markers (timbre, formants, prosody, accent, breath, idiosyncratic phrasing)

- Instrumental performance fingerprints (attack, vibrato, timing micro-variance, articulation, swing feel)

- Studio-musician signatures (the “nonfeatured” musicians who are often most identifiable to other musicians)

- Songwriter styles (harmonic signatures, prosodic alignment, and lyric identity markers)

- Production cues tied to an artist’s brand (ad-libs, signature FX chains, cadence habits, recurring delivery patterns)

A modern generative system does not need to “copy Track X” to exploit these signals. It can abstract them — compress them into representations and weights — and then reconstruct outputs that trade on identity while claiming no particular recording was reproduced.

That’s why “licensing” isn’t the real threshold question here. The threshold questions are disclosure and permission:

- Did Spotify extract personhood signals from performances on its platform?

- Were those signals used to train systems that can output tokenized “sounds like” content?

- Are there credible guardrails that prevent the model from generating identity-proximate vocal or instrumental performances?

- And can creators verify any of this without having to sue first?

If Spotify’s training data provenance is opaque, then creators cannot know whether their identity-bearing performances were converted into model value, which is the beginning of the commoditization of music in AI. And when the platform monetizes “derivatives” (aka competing outputs), it risks building a new revenue layer (for Spotify) on top of the very human signals that performers were never asked to contribute.

The Asymmetry Problem

Spotify knows what it trained on. Creators do not. That asymmetry alone is a structural concern.

When a platform possesses complete knowledge of training inputs, model architecture, and monetization pathways — while creators lack even basic disclosure — the bargaining imbalance becomes absolute. Transparency is not optional in this context. It is the minimum condition for legitimacy.

Without it, creators cannot:

- Assert rights

- Evaluate consent

- Measure market displacement

- Understand whether their work shaped model behavior

- Or even know whether their identity, voice, or performance has already been absorbed into machine systems

As every bully knows, opacity redistributes power.

Derivatives or Displacement?

Spotify frames AI derivatives as creative empowerment — fans remixing, artists expanding, new revenue streams emerging. But the core economic question remains unanswered:

Are these tools supplementing human creation or substituting for it?

If derivative systems can generate stylistically consistent outputs from trained material, then the value captured by the model originates in human recordings — recordings whose role in training remains undisclosed. In that scenario, AI derivatives are not simply tools. They are synthetic competitors built from the creative DNA of the original artists. Kind of like MTV.

The distinction between assistive and substitutional AI is economic, not rhetorical.

The Question That Will Not Go Away

Spotify may continue to speak about AI derivatives in the language of opportunity, scale, and creative democratization. But none of that resolves the underlying issue:

What did they train on?

Until Spotify provides clear, verifiable disclosure about the origin of its training data — not merely licensing claims, but actual transparency — every derivative output carries an unresolved provenance problem. And in the age of generative systems, undisclosed training is a real risk to the artists who feed the beast.

Framed this way, the harm is not merely reproduction of a copyrighted recording; it’s the extraction and commercialization of identity-linked signals from performances, potentially impacting featured and nonfeatured performers alike. Spotify’s failure (or refusal) to disclose training provenance becomes part of the harm, because it prevents anyone from assessing consent, compensation, or displacement.

And it makes it impossible to understand what value Spotify wants to license, much less whether we want them to do it at all or train our replacements.

Because maybe, just maybe, we don’t want another Spotify to build a business on our backs.